Spelunking in the Microsoft API, Part II: The Truth About Risky Sign-in Alerts

How accurate are Microsoft's native security signals? (Not very.)

Intro

Our mission at Petra is to have the fastest and most accurate detection system for Microsoft cloud environments.

Following Part I of our Microsoft API Latency series last week, a few of our partners asked us about Microsoft's risky sign-in alerts. So we pulled a month of risky sign-in data (11/15 - 12/15) from our tenants with Microsoft premium licenses and did a quick investigation.

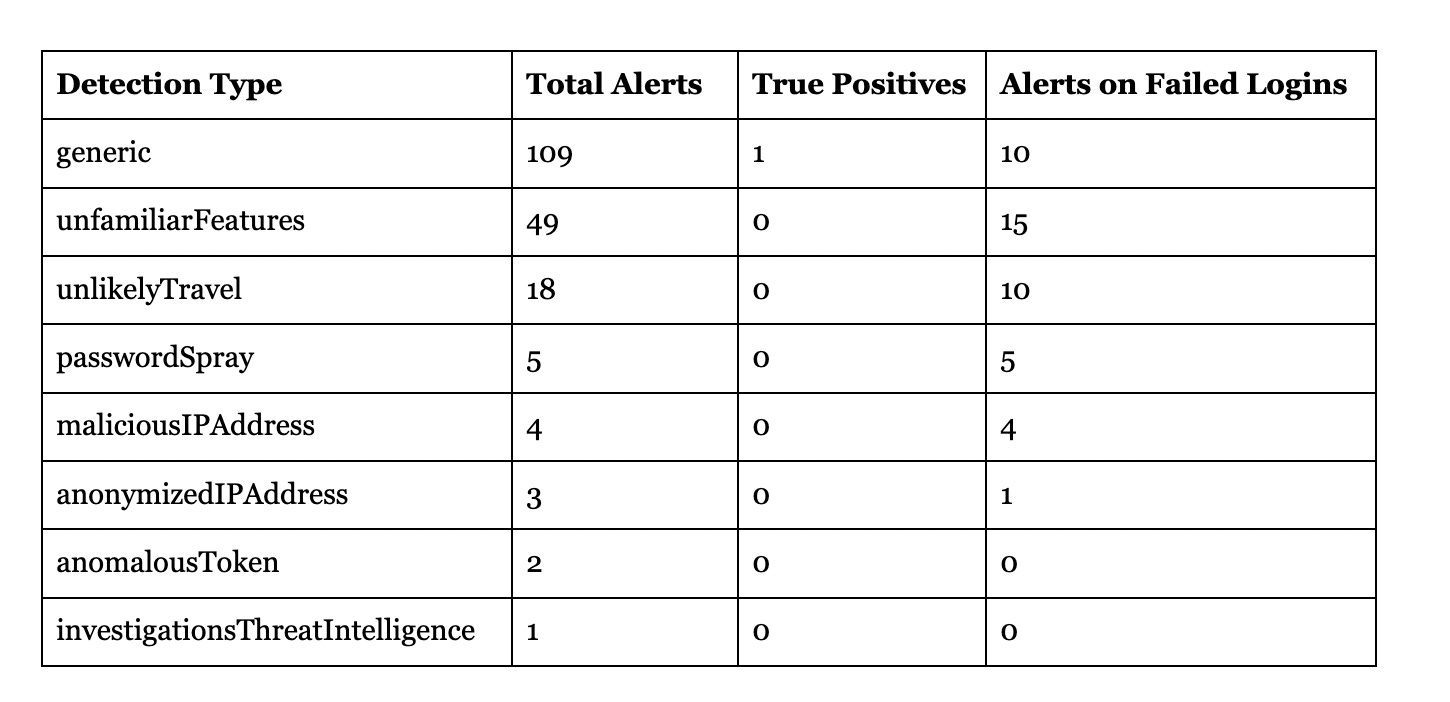

Across a sample of our tenants, Microsoft's built-in detections generated 191 risky sign-in alerts in 30 days. Here's what we found when we dug into the data.

Detection Delays: Hours to Days

Microsoft has two types of risky sign-in detections: real-time and offline.

In last week’s deep dive on log latency, we found that most login logs appear within 5-7 minutes, but a small portion are delayed for hours or days.

Delays for the 29 offline risky sign-ins in our data are much worse.

Note: Latency for each risky sign-in is calculated as detectedDateTime - activityDateTime.
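If you want to reproduce this measurement on your own tenant, here is a minimal sketch. It assumes you have already pulled risk detections (for example from the Microsoft Graph riskDetections endpoint) as dicts carrying the detectedDateTime, activityDateTime and detectionTimingType fields. The helper names and the sample records are hypothetical, not Petra's actual pipeline.

```python
from datetime import datetime, timezone
from statistics import median


def parse_graph_time(value: str) -> datetime:
    """Parse a UTC timestamp like "2024-11-20T14:03:12.1234567Z".

    Only the first 19 characters are used, so sub-second precision is
    dropped; that is fine for latencies measured in hours.
    """
    return datetime.strptime(value[:19], "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)


def detection_latency_hours(detection: dict) -> float:
    """Latency = detectedDateTime - activityDateTime, in hours."""
    detected = parse_graph_time(detection["detectedDateTime"])
    activity = parse_graph_time(detection["activityDateTime"])
    return (detected - activity).total_seconds() / 3600


def latency_summary(detections: list[dict], timing: str = "offline") -> dict:
    """Count, median and max latency for one detectionTimingType."""
    latencies = [
        detection_latency_hours(d)
        for d in detections
        if d.get("detectionTimingType") == timing
    ]
    if not latencies:
        return {"count": 0, "median_hours": None, "max_hours": None}
    return {
        "count": len(latencies),
        "median_hours": median(latencies),
        "max_hours": max(latencies),
    }


# Hypothetical records shaped like riskDetection objects (values are made up).
sample = [
    {"riskEventType": "unlikelyTravel", "detectionTimingType": "offline",
     "activityDateTime": "2024-11-20T14:00:00Z",
     "detectedDateTime": "2024-11-20T22:30:00.1234567Z"},
    {"riskEventType": "anonymizedIPAddress", "detectionTimingType": "realtime",
     "activityDateTime": "2024-11-21T09:00:00Z",
     "detectedDateTime": "2024-11-21T09:00:05Z"},
    {"riskEventType": "passwordSpray", "detectionTimingType": "offline",
     "activityDateTime": "2024-11-22T08:00:00Z",
     "detectedDateTime": "2024-11-24T10:00:00Z"},
]

print(latency_summary(sample))
# {'count': 2, 'median_hours': 29.25, 'max_hours': 50.0}
```

Splitting on detectionTimingType keeps the real-time detections from masking how slow the offline ones are.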

Note what’s happening here:

More than half of Microsoft's risky sign-in alerts arrive 7.5 hours or more after an attacker may have compromised an employee account. Many arrive two or more full days after a potential compromise.

This tracks with what other teams are seeing: Invictus IR recently documented an anomalous token alert that took 12 hours to surface after the attack.

Put another way: by the time Microsoft's system alerts on a potential attack, the compromise could be days old. By the time you investigate, the attacker may have already performed lateral movement or data exfiltration in your environment.

Unfortunately, latency is only half the problem here. The other half is noise.

Precision Problems: 99% Noise

Fortunately, because we’ve been looking for attacks across these tenants in real time, we can measure exactly how Microsoft's alerts perform. Here's the breakdown:

If you're relying on Microsoft’s Risky Sign-in Alerts, these numbers should worry you.

Microsoft’s alerts have a precision of just 0.5% (1 true positive out of 191). If each alert costs 15-20 minutes of investigation, even the low end (191 × 15 minutes) comes to nearly 48 hours of triage a month.
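The arithmetic behind those figures, as a quick check. Precision is true positives divided by total alerts; triage time assumes the 15-20 minutes per alert estimated above. The function names are just for illustration.

```python
def precision(true_positives: int, total_alerts: int) -> float:
    """Fraction of alerts that were real attacks."""
    return true_positives / total_alerts


def triage_hours(total_alerts: int, minutes_per_alert: float) -> float:
    """Total analyst time spent triaging, in hours."""
    return total_alerts * minutes_per_alert / 60


alerts, true_positives = 191, 1
print(f"precision: {precision(true_positives, alerts):.1%}")  # precision: 0.5%
low, high = triage_hours(alerts, 15), triage_hours(alerts, 20)
print(f"triage: {low:.1f} to {high:.1f} hours/month")  # triage: 47.8 to 63.7 hours/month
```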

Worse, many of these alerts aren't actionable.

Take the passwordSpray detection: all 5 of its alerts were for failed logins. Because the logins failed, they posed no actual risk.
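One way to avoid triaging alerts like these is to check the outcome of the underlying sign-in first. The sketch below assumes that a risk detection and its sign-in log entries share a correlationId and that a sign-in's status.errorCode is 0 on success. That join is an assumption that may not hold for every detection type, so unmatched detections are kept rather than dropped. The function name and records are hypothetical.

```python
def split_by_signin_outcome(
    detections: list[dict], sign_ins: list[dict]
) -> tuple[list[dict], list[dict]]:
    """Separate detections whose matched sign-ins all failed from the rest.

    Assumption: detections and sign-ins can be joined on correlationId.
    """
    # correlationId -> True if at least one sign-in with that ID succeeded.
    any_success: dict[str, bool] = {}
    for s in sign_ins:
        cid = s.get("correlationId")
        if not cid:
            continue
        succeeded = s.get("status", {}).get("errorCode") == 0  # 0 means success
        any_success[cid] = any_success.get(cid, False) or succeeded

    actionable, failed_only = [], []
    for d in detections:
        cid = d.get("correlationId")
        if cid in any_success and not any_success[cid]:
            failed_only.append(d)  # every matched sign-in failed
        else:
            actionable.append(d)  # a sign-in succeeded, or nothing matched
    return actionable, failed_only


# Hypothetical, heavily trimmed records (IDs and error codes are illustrative).
detections = [
    {"id": "det-1", "riskEventType": "passwordSpray", "correlationId": "c-1"},
    {"id": "det-2", "riskEventType": "unlikelyTravel", "correlationId": "c-2"},
    {"id": "det-3", "riskEventType": "anonymizedIPAddress", "correlationId": "c-3"},
]
sign_ins = [
    {"correlationId": "c-1", "status": {"errorCode": 50126}},
    {"correlationId": "c-2", "status": {"errorCode": 0}},
]

actionable, failed_only = split_by_signin_outcome(detections, sign_ins)
print([d["id"] for d in actionable])   # ['det-2', 'det-3']
print([d["id"] for d in failed_only])  # ['det-1']
```

Failed-only detections are deprioritized rather than deleted, since a password spray can still be worth knowing about even when no attempt succeeded.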

Many alerts also place the activity somewhere other than where it actually came from. The geolocation accuracy of Microsoft’s alerts is particularly concerning. In one case, we saw an unlikelyTravel alert triggered for a California-based user whose IPv6 login from Los Angeles was incorrectly placed in Philadelphia. This isn't an isolated incident: 4 out of 18 unlikelyTravel alerts had completely wrong locations.
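To see why a single mislocated IP is enough to fire a travel alert, here is a generic impossible-travel check: great-circle distance between two sign-ins divided by the time between them. This is not Microsoft's unlikelyTravel algorithm, which isn't public in this detail; the 900 km/h threshold, the coordinates, and the SignIn type are assumptions for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumption: roughly airliner cruising speed


@dataclass
class SignIn:
    user: str
    lat: float
    lon: float
    minutes: float  # minutes since an arbitrary reference time


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(a: SignIn, b: SignIn) -> bool:
    """Flag two sign-ins whose implied travel speed exceeds the threshold."""
    distance = haversine_km(a.lat, a.lon, b.lat, b.lon)
    # Treat sign-ins as at least one minute apart to avoid dividing by zero.
    hours = max(abs(b.minutes - a.minutes) / 60, 1 / 60)
    return distance / hours > MAX_PLAUSIBLE_KMH


la = SignIn("user@example.com", 34.05, -118.24, minutes=0)
la_again = SignIn("user@example.com", 34.05, -118.24, minutes=30)
misplaced = SignIn("user@example.com", 39.95, -75.17, minutes=30)  # same LA login, wrong geolocation
print(is_impossible_travel(la, la_again))   # False
print(is_impossible_travel(la, misplaced))  # True
```

Roughly 3,800 km in 30 minutes implies a speed far beyond any flight, so any rule of this shape fires on the bad geolocation alone.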

Slow, noisy alerts with questionable location data are certainly not ideal.

Usually, this trade-off comes with a benefit: a noisy system at least doesn’t miss attacks.

Unfortunately, our research pointed to the opposite conclusion.

Biggest Blind Spot: Missing Most Attacks

During the one-month period of 11/15 - 12/15, Petra’s system caught 6 confirmed attacks across these tenants.

Microsoft’s 191 alerts caught just 1 of those 6.
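Put in detection terms, that is a recall of about 17%: the fraction of confirmed attacks that at least one alert caught. A small sketch, where the attack IDs are hypothetical placeholders (the post doesn't say which of the six was caught):

```python
def recall(detected: set[str], confirmed: set[str]) -> float:
    """Fraction of confirmed attacks that were detected."""
    return len(detected & confirmed) / len(confirmed)


confirmed_attacks = {f"attack-{i}" for i in range(1, 7)}  # 6 confirmed attacks (hypothetical IDs)
microsoft_caught = {"attack-3"}  # hypothetical: which one was caught is not stated
print(f"recall: {recall(microsoft_caught, confirmed_attacks):.0%}")  # recall: 17%
```

Read alongside the 0.5% precision, this means the alerts are both mostly wrong and mostly absent when it matters.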

These aren't just statistics. They represent real security incidents where attackers could have gained persistent access to sensitive environments while defensive teams were busy chasing false positives.

Honestly, this result completely caught me off guard. I knew Microsoft’s alerts were noisy, but the missed attacks are a much more serious problem.

The Bottom Line for Security Teams

If you're relying on Microsoft's risky sign-in detections, you're facing a perfect storm of security challenges:

Your team is drowning in false positives

You're missing real attacks

Even when you do get an alert, it's often too late by several hours

For comparison, our system at Petra caught all but one of these attacks (with just a single false positive), and did so within minutes of the events occurring. This isn't meant to be a sales pitch; it's just evidence that better detection is possible.

If you've had similar experiences with Microsoft's security alerts, I’d love to hear from you. I'd especially love to hear from teams who've found creative ways to work around these limitations.

Until next time,

Adithya