Spelunking in the Microsoft API, Part I: Entra ID Latency

One of the most important and least understood factors for building ML systems using Entra ID Login Events

Intro

At Petra, as we’ve been building the next generation of our real-time detection system, we've been working closely with the Microsoft Office 365 Management API.

Because our system pulls logs from the API once a minute (across ALL tenants), we have a unique window into the API's latency.

There isn't much public documentation about real-world performance on Microsoft’s API, so we had to go spelunking for ourselves. We did this as part of a larger effort to improve the accuracy of our system and cover the gaps introduced by latencies.

Here’s what Microsoft doesn’t tell you about the time gap between when events occur and when they actually become available.

—

This will be the first post in an ongoing series closely examining Microsoft's API latencies.

Today we'll focus on Entra ID login events, and we'll look at Exchange, SharePoint and Teams logs in follow-up posts.

Quick note on methodology: I’m measuring latency as the delta between an event's creationTime (as reported by Microsoft) and when our system actually ingests it. Since we poll the API every minute, all numbers have a ~1 minute margin of error. Not perfect, but good enough to spot interesting patterns.
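To make that definition concrete, here's a minimal sketch of the calculation. The function name `latency_minutes`, the `ingested_at` argument, and the timestamps are illustrative, and the sketch assumes the event time arrives as an ISO 8601 string that is UTC when it carries no offset. It is not our production code.

```python
from datetime import datetime, timezone

def latency_minutes(creation_time: str, ingested_at: datetime) -> float:
    """Minutes between an event's creationTime and the moment we ingested it."""
    created = datetime.fromisoformat(creation_time)
    if created.tzinfo is None:
        # Assumption: a timestamp without an offset is in UTC.
        created = created.replace(tzinfo=timezone.utc)
    return (ingested_at - created).total_seconds() / 60.0

# Made-up timestamps, purely for illustration.
ingested = datetime(2024, 11, 27, 10, 4, 30, tzinfo=timezone.utc)
example = latency_minutes("2024-11-27T10:00:00", ingested)
```

Because ingestion only happens on a one-minute polling tick, any value computed this way can overstate the true latency by up to about a minute.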

Let's dive into what I found from analyzing 72 hours of data (Nov 27-30), covering 51,458 login events across 2,364 users.

—

How latency changes throughout a day

If we pull the data over the course of a given 24-hour period, the first thing we notice is that latencies consistently peak between 22:00 and 10:00 UTC, exactly when our US-based users are least active.

That’s weird - what’s going on?

Theory #1 is that this could be related to global Microsoft 365 traffic patterns. 22:00-10:00 UTC lines up with business hours across Asia and Europe.

All percentile bands show this pattern, though it's most pronounced in the p95, where latencies jump from 6-7 minutes during US hours to 8-10 minutes during this global peak.
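For anyone who wants to reproduce this kind of hourly view on their own logs, here's a rough sketch of how the percentile bands could be computed. The input shape (pairs of UTC hour and latency in minutes) and the name `hourly_bands` are assumptions for illustration, not a description of our pipeline.

```python
from collections import defaultdict
from statistics import quantiles

def hourly_bands(events):
    """events: iterable of (utc_hour, latency_minutes) pairs.

    Returns {utc_hour: (p50, p95)} for every hour with enough data.
    """
    by_hour = defaultdict(list)
    for hour, latency in events:
        by_hour[hour].append(latency)

    bands = {}
    for hour, values in sorted(by_hour.items()):
        if len(values) < 2:
            continue  # quantiles() needs at least two data points
        # n=100 yields 99 cut points; index 49 is p50 and index 94 is p95.
        cuts = quantiles(values, n=100, method="inclusive")
        bands[hour] = (cuts[49], cuts[94])
    return bands

# Synthetic data: latencies 1..101 minutes, all in the 03:00 UTC hour.
bands = hourly_bands((3, float(v)) for v in range(1, 102))
```

Plotting the p50 and p95 values per hour is enough to see whether your own tenants show the same 22:00 to 10:00 UTC bump.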

Put another way - the API’s worst-case performance during this period gets even worse.

A follow-up question: are latencies different between failed and successful logins?

Across the 51,458 logins we analyzed, there were 26,040 successful and 25,418 failed logins. Let’s compare their latencies:

Looking at the breakdown, we see that successful and failed logins have roughly the same latency.

Even during the peak latency periods we identified earlier, both types of logins have roughly the same spikes. This suggests that, regardless of whether a login succeeds or fails, it hits the API at roughly the same speed.

This is somewhat surprising; one would expect failed logins, especially those involving additional authentication steps or lockouts, to take longer to process. Looks like the data doesn't support that assumption, at least not for the majority of events.

The worst case is really bad.

You may notice I haven’t included more tail statistics in the charts above, like the p99 and above.

This is because these worst-case stats end up severely skewing the y-axis. Put another way: the worst case is really bad.

Let’s look at the aggregate distribution over our 72 hour window:

The typical case actually isn't too bad - most events show up within about 5 minutes, with 90% arriving within 6.5 minutes.

That median latency of 4.42 minutes is pretty consistent, as shown by the identical p25 and p50 numbers.

This means that the vast majority of the time, we can detect the bad guys within 10 minutes of the unauthorized access. Not terrible, especially for those without a premium Microsoft license.

However, the tail-end latencies (the API's worst cases) are where things get really interesting.

While 95% of events arrive within a reasonable 7.4 minutes, the distribution has a dramatic long tail. There's a substantial jump between p95 (7.38 minutes) and p99 (12.49 minutes), but the real shock comes after that: at p99.5, we're suddenly looking at 4 hours of latency. By p99.9, events are taking over 25 hours to appear. And in the most extreme case we observed, there was a delay of over 6 days!
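If you want to check the tail on your own data, a sketch along these lines would do it. It uses the nearest-rank definition of a percentile, which is one of several common conventions and an assumption on my part; the function names are illustrative.

```python
import math

def percentile(sorted_values, p):
    """Nearest-rank percentile of an ascending-sorted list, for 0 < p <= 100."""
    # round() guards against floating-point noise pushing the rank up by one.
    rank = math.ceil(round(p * len(sorted_values) / 100, 9))
    return sorted_values[max(rank, 1) - 1]

def tail_summary(latencies, levels=(50, 90, 95, 99, 99.5, 99.9)):
    """Return {"p50": ..., "p99.5": ...} for the requested percentile levels."""
    ordered = sorted(latencies)
    return {f"p{level}": percentile(ordered, level) for level in levels}

# Synthetic latencies of 1..200 minutes, just to exercise the function.
summary = tail_summary(range(1, 201))
```

The fractional levels matter here: with a tail this long, p99 alone hides most of the story.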

This raises some important questions. What's happening with these severely delayed events? Are they random, or is there a pattern? Are certain types of login events more likely to be delayed? And most importantly - can we identify characteristics that might help us predict or at least understand when an event might fall into this long tail?

For our use-case of detecting account compromise, this is critically important. A 6-day delayed log could be an opportunity for an attacker to set up and maintain persistence. So, we have to figure out how to solve it.

Understanding the Long Tail of Latency

Let’s zoom in on this 99th percentile, the worst of the worst cases, and revisit the question of how latencies differ when a login succeeds or fails.

An interesting skew pops out in these severely delayed events (>12.5 minutes latency).

Of the 485 login events in this p99+ range, 215 were successful and 270 were failed logins. That's roughly a 56% / 44% split favoring failed logins in our long-tail events, compared to a nearly even split in our overall dataset, where failed logins make up about 49% of events.
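The arithmetic behind that comparison is simple enough to check directly. The helper name `failed_share` is just for illustration; the counts are the ones quoted in this post.

```python
def failed_share(successful: int, failed: int) -> float:
    """Percentage of logins that failed."""
    return 100.0 * failed / (successful + failed)

tail_failed = failed_share(215, 270)            # p99+ events
overall_failed = failed_share(26_040, 25_418)   # full 72-hour dataset
```

Failed logins account for about 55.7% of the long tail versus about 49.4% of all events, a shift of roughly six percentage points.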

Digging into the graph reveals another pattern: for events delayed between 10-60 minutes, we see a fairly even distribution between successful and failed logins.

As the latency increases beyond an hour, however, failed logins start to dominate. This suggests that whatever is causing these extreme delays is more likely to affect failed login events.

This naturally leads us to the next question - what kinds of failed logins are we seeing in these delayed events?

Let's look at the error codes:

It looks like the overwhelming majority of our severely delayed failed logins (195 out of 270) have the error code IdsLocked.

Here’s what the Microsoft API docs have to say about this error code:

“The account is locked because the user tried to sign in too many times with an incorrect user ID or password. The user is blocked due to repeated sign-in attempts. See Remediate risks and unblock users.”

Or, “sign-in was blocked because it came from an IP address with malicious activity.”

This isn’t too helpful, so let’s keep digging.

Case Study: 1 User, 21 Delayed Logins

Breaking down these 485 severely delayed logins by user, we find:

264 distinct users with severely delayed logins

12 users with more than 5 severely delayed logins

4 users with more than 10 severely delayed logins

One user with 21 severely delayed logins
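A breakdown like this one can be produced with a simple counter over the user IDs attached to each severely delayed event. This is a sketch under that assumption; the function name, the thresholds argument, and the toy IDs are all hypothetical.

```python
from collections import Counter

def delayed_login_breakdown(user_ids, thresholds=(5, 10)):
    """Summarize how severely delayed logins are spread across users.

    user_ids: one entry per severely delayed login event.
    """
    per_user = Counter(user_ids)
    summary = {"distinct_users": len(per_user)}
    for t in thresholds:
        summary[f"more_than_{t}"] = sum(1 for c in per_user.values() if c > t)
    summary["max_per_user"] = max(per_user.values(), default=0)
    return summary

# Toy input: three made-up users with 21, 6, and 2 delayed logins.
breakdown = delayed_login_breakdown(["a"] * 21 + ["b"] * 6 + ["c"] * 2)
```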

Time to figure out what’s going on with that poor user …

All 21 of these severely delayed logins are failed and have the failure code IdsLocked.

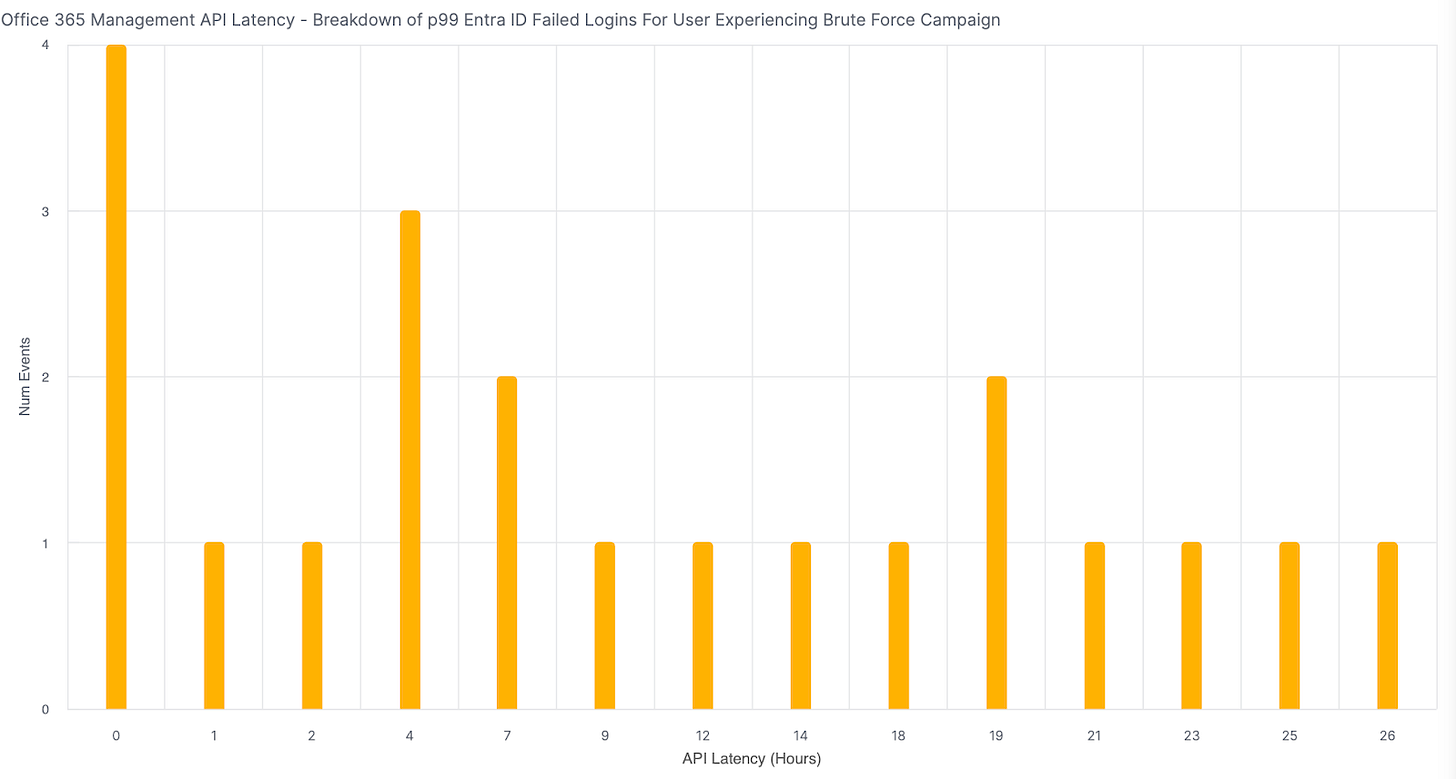

Here’s a breakdown of their latency:

Interestingly, instead of arriving at similar delays, we see distinct clusters.

A large group of these severely delayed logs arrive around the 0-1 hour mark, another cluster around 4 hours, and then sporadic events stretching out to 26 hours.

When we break these down by location, something really interesting pops up:

It looks like there’s a significant geographic spread of these failed login attempts - while there's a concentration from China (10 events), we're seeing attempts across multiple regions including the US, UK, India, and various European countries.

This smells a lot like brute force. Most modern brute force campaigns rotate through global IP addresses to avoid detection.

Given that these severely delayed logins are likely part of a brute force campaign, it seems probable that most of these IdsLocked failed logins are actually due to the latter of the two possible reasons that Microsoft highlights in their docs: “sign-in was blocked because it came from an IP address with malicious activity.”

What could be happening on Microsoft’s end to create this "bursty" pattern of delayed events? Why are they coming from all these different geographies? Here are my three theories:

Microsoft might be doing additional processing on these events, maybe cross-referencing with their threat intelligence systems to identify malicious IPs or patterns.

The lockout events themselves could be batched or throttled differently than normal authentication events, especially if they're coming from IPs that Microsoft has flagged as suspicious.

There might be some internal queuing mechanism that prioritizes successful logins over security-related failures, explaining why the lockouts consistently show up later.

Wrapping up

If you’re working in Microsoft detection engineering, there are three things you should take away from this:

For most use cases, you can expect login events to appear within 5-7 minutes. This "normal" latency is fairly consistent and predictable.

Global traffic patterns matter - expect slightly higher latencies (2-3 minutes more) during peak business hours across Asia and Europe, even for US-based users.

The long tail (the worst case) is where things get interesting. Only 1% of events take longer than 12.5 minutes to appear, but these delayed events follow a distinct pattern. Most of them seem to come from IPs that Microsoft suspects of being malicious.

For our future posts in this series, we'll look at similar patterns in Exchange, SharePoint, and Teams logs.

If you've observed other interesting patterns in the O365 Management API or have any specific questions you’d like me to answer in the next blog, I'd love to hear about them in the comments.

Catch you later,

Adithya